The 3D design industry is experiencing its most significant transformation since the shift from 2D to 3D. Generation technology has evolved from an experimental novelty into a production-ready tool, and global searches for "3D AI" have surged 300% over the past five years. The 3D modeling market is growing 20% annually, driven primarily by advances in generation capabilities that dramatically reduce creation time while expanding creative possibilities.

This isn't about replacing designers—it's about amplifying their capabilities. Modern generation tools handle repetitive modeling tasks, explore design variations at scale, and transform rough concepts into refined 3D geometry. For designers, this means spending less time on technical execution and more time on creative decision-making, strategic thinking, and solving complex design challenges.

This guide explores how generation technology is reshaping 3D workflows, which practical applications are transforming industries, and how platforms like Womp, through its Spark features, are making professional-quality generation accessible to everyone.

Generation technology in 3D design refers to systems that create three-dimensional geometry from various inputs—text descriptions, images, or even rough sketches. Unlike traditional modeling where designers manually define every edge and surface, generation systems interpret intent and produce geometry automatically.

The technology works through machine learning models trained on vast datasets of 3D objects. These systems learn patterns—how objects are structured, what makes geometry printable, how different materials and forms relate. When you provide input, the system references this learned knowledge to generate appropriate geometry matching your specifications.

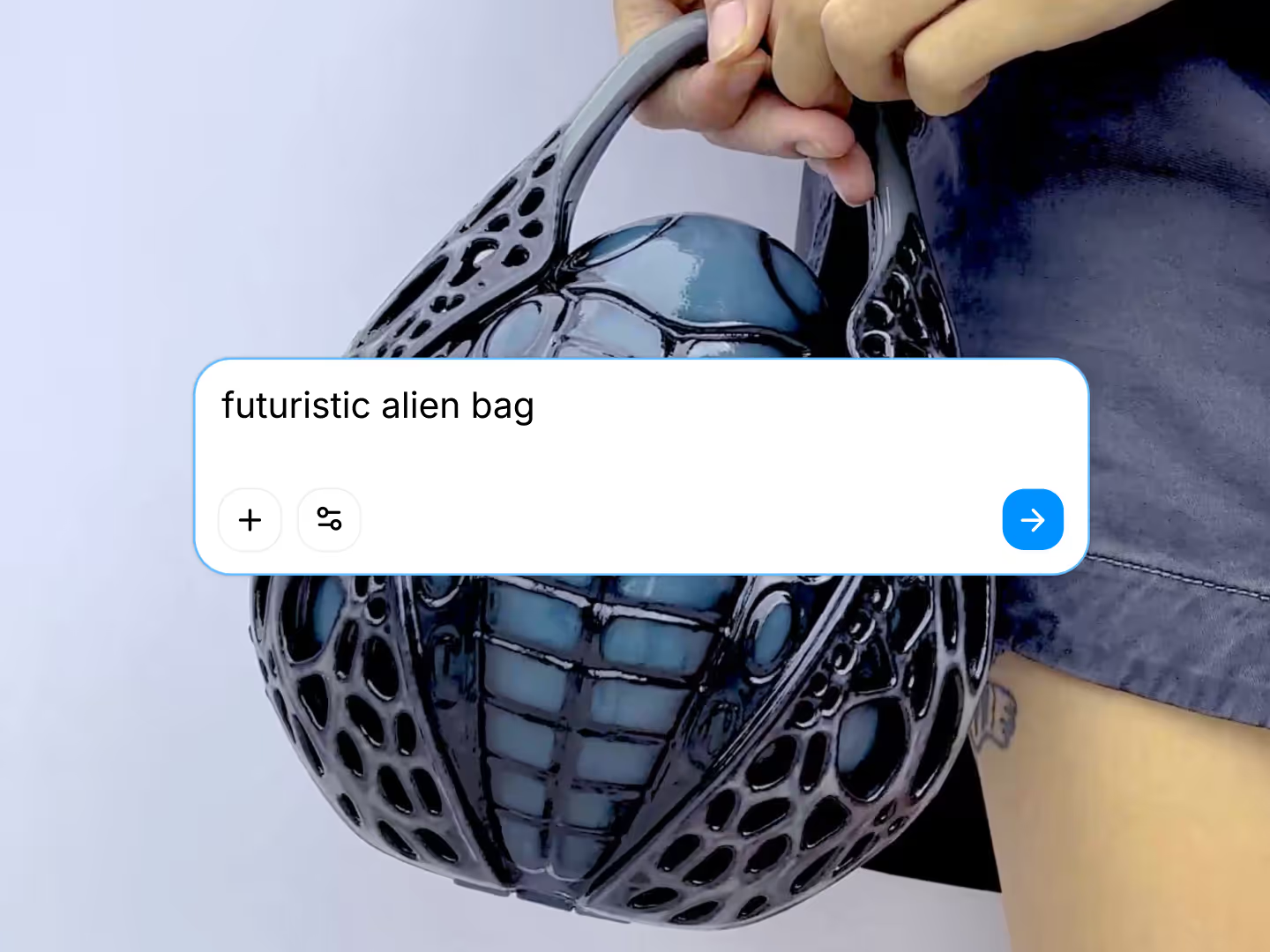

The most accessible form of generation, text-to-3D, starts from a natural-language description of what you want, such as "create a sports car with aggressive styling" or "design a coffee mug with geometric patterns." The system interprets your description and generates corresponding 3D geometry. This approach excels at rapid concept exploration, where you're testing ideas rather than executing precise designs.

The key to effective text-to-3D generation is specificity. Vague descriptions like "make something cool" produce unpredictable results. Detailed prompts specifying form, style, proportions, and key features guide the system toward useful outputs. You're not replacing design thinking—you're communicating intent more efficiently than manually modeling would allow.

Often more predictable than text-based generation, image-to-3D analyzes reference images and constructs three-dimensional geometry matching the visual content. You provide a photo, sketch, or existing 2D artwork, and the system generates corresponding 3D forms.

This workflow is particularly powerful when combined with traditional image tools. Create or find the perfect visual reference through photos, illustration software, or image generation, then convert that validated 2D concept to 3D geometry. This two-step process often produces better results than direct text-to-3D because you validate the concept visually before committing to 3D conversion.

Modern generation systems don't just create abstract forms; they produce actual mesh geometry for use in 3D workflows. At their best, generated models have proper topology, appropriate detail levels, and structures compatible with standard 3D operations such as editing, texturing, and 3D printing.

The quality of generated meshes varies significantly across platforms. Some produce rough approximations requiring substantial cleanup. Professional systems generate clean topology, appropriate polygon density, and manifold geometry suitable for immediate use in production workflows or printing services.
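What "manifold" means for printing can be checked mechanically. Below is a minimal sketch, assuming a triangle mesh stored as a list of vertex-index triples (a representation chosen for illustration, not any platform's actual format). In a closed, edge-manifold mesh every edge is shared by exactly two triangles, so an edge used once signals a hole and an edge used three or more times signals a non-manifold fin.

```python
from collections import Counter


def is_closed_edge_manifold(faces):
    """Return True if every undirected edge is shared by exactly two triangles.

    `faces` is a list of (i, j, k) vertex-index triples. This is an edge-level
    check only: it does not detect non-manifold vertices, inconsistent winding,
    self-intersections, or thin walls.
    """
    edge_counts = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            # Store each edge with sorted endpoints so (1, 0) and (0, 1) match.
            edge_counts[(min(u, v), max(u, v))] += 1
    # An empty mesh is not a valid closed surface.
    return bool(edge_counts) and all(n == 2 for n in edge_counts.values())


# A tetrahedron: 4 vertices, 4 triangular faces, a closed surface.
tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
# The same mesh with one face removed has a hole (three boundary edges).
open_tetra = tetra[:-1]

print(is_closed_edge_manifold(tetra))       # True
print(is_closed_edge_manifold(open_tetra))  # False
```

This catches only edge-level problems; print-preparation tools typically run further checks for vertex manifoldness, face orientation, and minimum wall thickness.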

Generation technology isn't theoretical—it's actively transforming how designers work across industries. The applications delivering immediate value focus on accelerating specific workflow bottlenecks rather than attempting to automate entire design processes.

The traditional concept phase involves sketching ideas, creating rough 3D blockouts, and iterating through alternatives to find promising directions. This process takes hours or days per concept. Generation technology compresses this to minutes, enabling designers to explore vastly more alternatives in the same timeframe.

For product development teams, this changes the economics of exploration. Instead of carefully selecting three concepts to develop based on sketches, you can generate twenty variations, evaluate them as actual 3D models, and invest refinement time on the most promising options. This increases the probability of finding optimal solutions by expanding the search space dramatically.
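The claim that more concepts raise the odds of a good outcome can be made concrete with a deliberately simple model. Assume, purely for illustration, that each concept independently has the same probability p of being a strong direction. Then the chance that at least one of n concepts is strong is 1 - (1 - p)^n. The value p = 0.10 below is hypothetical, not a measured figure.

```python
def chance_of_at_least_one_hit(p: float, n: int) -> float:
    """Probability that at least one of n independent concepts succeeds,
    when each one succeeds with probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 0:
        raise ValueError("n must be non-negative")
    return 1.0 - (1.0 - p) ** n


# Hypothetical per-concept hit rate of 10%.
p = 0.10
for n in (3, 20):
    print(f"{n:>2} concepts: {chance_of_at_least_one_hit(p, n):.1%}")
```

Under these assumptions, three concepts give about a 27% chance of at least one strong direction and twenty give about 88%. Variations generated from similar prompts are usually correlated rather than independent, so this model tends to overstate the gain; it illustrates the direction of the effect, not its size.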

The key workflow is: generate broadly, then refine selectively. Use generation to create many alternatives quickly, evaluate them against your design criteria, and select the winners for detailed refinement with traditional modeling techniques. This hybrid approach combines generation's breadth with human judgment and the precision of traditional tools.
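The "generate broadly, refine selectively" step boils down to scoring candidates and keeping a shortlist. Below is a minimal sketch, assuming a team rates each generated concept from 0 to 10 against its own criteria; the criteria names, weights, concept names, and scores are all hypothetical.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Concept:
    name: str
    scores: dict[str, float]  # criterion -> rating on a 0-10 scale


# Hypothetical weights; a real team would choose its own criteria.
WEIGHTS = {"aesthetics": 0.5, "manufacturability": 0.3, "cost": 0.2}


def weighted_score(concept: Concept) -> float:
    """Combine per-criterion ratings into one number using WEIGHTS."""
    return sum(w * concept.scores.get(c, 0.0) for c, w in WEIGHTS.items())


def shortlist(concepts: list[Concept], k: int) -> list[Concept]:
    """Keep the k highest-scoring concepts for detailed manual refinement."""
    return heapq.nlargest(k, concepts, key=weighted_score)


generated = [
    Concept("A", {"aesthetics": 8, "manufacturability": 5, "cost": 6}),
    Concept("B", {"aesthetics": 6, "manufacturability": 9, "cost": 8}),
    Concept("C", {"aesthetics": 9, "manufacturability": 4, "cost": 3}),
    Concept("D", {"aesthetics": 5, "manufacturability": 6, "cost": 9}),
]

for c in shortlist(generated, k=2):
    print(c.name, round(weighted_score(c), 2))
```

With these numbers it prints B (7.3) and then A (6.7). Writing the weights down makes the team's priorities explicit, and `heapq.nlargest` keeps the selection step cheap even for large batches of generated variations.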

Visualization projects often require numerous supporting assets—furniture for architectural renders, props for product photography, environmental elements for scenes. Creating these secondary elements manually consumes time better spent on primary subjects.

Generation technology excels at creating these supporting assets. Need generic background furniture? Generate it. Require various container shapes for packaging studies? Generate a dozen options. Want environmental props to populate a scene? Generate them in minutes rather than hours of manual modeling.

This application doesn't require perfect results; it requires results that are good enough, fast. Supporting assets don't need the same refinement as hero products, so generation's speed at producing adequate secondary geometry frees designers to focus on the primary subjects that need expert attention.

Design decisions often benefit from testing multiple options. Should a product housing be rounded or angular? Which handle design feels better? What proportions resonate with users? Traditionally, creating test variations required modeling each alternative—time-consuming enough that teams often skip testing that would improve outcomes.

Generation makes variations cheap to create. Describe the alternative you want to test, generate it, and evaluate it. Create multiple handle designs, various housing shapes, or different proportional relationships, then test them with users, stakeholders, or internal teams to gather data that informs decisions. This evidence-based approach leads to better designs while reducing subjective debate.

For entrepreneurs launching products, this testing capability is particularly valuable. Limited resources often force gut-feel decisions. Generation's low cost per variation enables data-driven choices even on tight budgets, increasing the probability of market success.

Every designer faces moments when inspiration stalls. You know generally what's needed but can't visualize specifics. Traditional approaches involve sketching, browsing references, or discussing with colleagues—time-consuming processes that may or may not unlock creativity.

Generation provides another approach. Describe what you're trying to achieve, generate options, evaluate what works and what doesn't. Sometimes the results are directly usable. More often, they suggest directions you hadn't considered or highlight what you don't want, clarifying your vision through contrast. This creative dialogue accelerates the path from vague intent to concrete direction.

Understanding generation technology theoretically is useful, but practical value comes from implementation. Womp Spark demonstrates how generation capabilities integrate seamlessly into design platforms, making powerful technology accessible without complexity or technical barriers.

Rather than navigating complex parameter panels or learning specialized prompt syntax, Spark uses natural language. You ask for what you want in plain English: "create a modern coffee table with clean lines" or "generate a decorative vase with organic patterns." The system interprets your intent and produces appropriate geometry.

This conversational approach has significant implications for accessibility. Designers don't need to learn new interfaces or technical vocabularies. If you can describe what you want verbally, you can generate it. This removes expertise barriers, enabling more team members to contribute to concept development regardless of technical modeling skills.

The conversation maintains context, allowing iterative refinement through dialogue. "Make it taller," "add geometric details," "try a different material aesthetic"—each instruction refines previous generations without restarting from scratch. This natural iteration pattern mirrors how humans brainstorm and refine ideas collaboratively.

Spark provides flexibility in how you approach generation, recognizing that different projects benefit from different workflows: text-to-3D for rapid concept generation, image-to-3D when you have visual references or want to validate concepts as images first, and direct mesh generation when you need immediately usable geometry.

The recommended workflow combines these approaches. Generate images first, iterate until you achieve desired aesthetics, then convert winning concepts to 3D geometry. This two-step process leverages image generation's speed and predictability while ultimately delivering 3D models ready for refinement and production.

This workflow flexibility matters because real projects have varying requirements. Sometimes you need rapid 3D prototypes for form studies. Other times, careful image iteration followed by conversion produces better results. Spark adapts to your needs rather than forcing you into rigid workflows.

Generated models integrate immediately into Womp's design environment. Click "Add to Scene" and geometry appears in your workspace, ready for refinement using Womp's modeling tools. This seamless integration prevents workflow interruption—generation isn't isolated in a separate tool requiring export/import cycles.

The practical impact is maintaining creative momentum. When ideas flow, you generate concepts, import promising options, refine them, and continue designing without context switches breaking your creative state. This fluidity distinguishes integrated generation from standalone tools that, however powerful, disrupt workflow through constant tool switching.

Spark's generation systems are trained specifically for 3D printing compatibility. Generated models aren't just visually plausible; they're manifold geometry with appropriate wall thickness, proper dimensions, and structure suitable for SLA printing. This print-readiness eliminates a common problem where generation produces visually interesting but unprintable geometry.

For designers creating physical products or prototypes, this matters enormously. You can generate a concept, click the 3D Print button, and order professional prints without manual geometry cleanup or repair. Going from idea to print order in minutes represents the practical value of well-integrated generation technology.

Generation technology is powerful but not magical. Effective use requires understanding its capabilities, limitations, and optimal application patterns. Following proven practices accelerates learning and improves results.

Vague instructions produce unpredictable results; "make something cool" could generate anything. Detailed descriptions guide the system toward useful outputs, so specify style, form characteristics, proportions, and key features. For example: "Create a modern desk lamp with a curved arm, circular base, and minimalist aesthetic."

Include relevant context about intended use. "Design a coffee mug handle ergonomic for right-hand use" produces different results than simply "create a mug handle." The system uses this context to make appropriate geometric decisions aligned with your actual requirements.

Think of prompts as design briefs. The more clearly you communicate requirements, the more likely the system generates useful results. This doesn't mean writing paragraphs—concise, specific descriptions typically work best—but it does mean moving beyond minimal generic descriptions.
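Treating a prompt as a design brief can be made systematic by filling in the same fields every time. Below is a minimal sketch; `DesignBrief` and `join_phrases` are hypothetical helpers that only assemble prompt text and do not call Spark or any other generation service.

```python
from dataclasses import dataclass, field


def join_phrases(items: list[str]) -> str:
    """Join phrases as natural English: 'a', 'a and b', 'a, b, and c'."""
    if len(items) == 1:
        return items[0]
    if len(items) == 2:
        return f"{items[0]} and {items[1]}"
    return ", ".join(items[:-1]) + f", and {items[-1]}"


@dataclass
class DesignBrief:
    subject: str                                       # what to make, e.g. "desk lamp"
    style: str = ""                                    # e.g. "modern"
    features: list[str] = field(default_factory=list)  # key form characteristics
    intended_use: str = ""                             # context that shapes geometry

    def to_prompt(self) -> str:
        """Turn the brief into one concise, specific prompt sentence."""
        noun_phrase = f"{self.style} {self.subject}" if self.style else self.subject
        # Naive a/an choice by first letter; good enough for illustration.
        article = "an" if noun_phrase[0].lower() in "aeiou" else "a"
        prompt = f"Create {article} {noun_phrase}"
        if self.features:
            prompt += " with " + join_phrases(self.features)
        if self.intended_use:
            prompt += f", designed for {self.intended_use}"
        return prompt + "."


lamp = DesignBrief(
    subject="desk lamp",
    style="modern",
    features=["a curved arm", "a circular base", "a minimalist aesthetic"],
)
handle = DesignBrief(subject="coffee mug handle", intended_use="comfortable right-hand use")

print(lamp.to_prompt())
# Create a modern desk lamp with a curved arm, a circular base, and a minimalist aesthetic.
print(handle.to_prompt())
# Create a coffee mug handle, designed for comfortable right-hand use.
```

Leaving a field empty simply drops that clause, which keeps prompts concise while making it obvious when a brief is missing context such as intended use.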

First generations rarely produce perfect results. Treat them as starting points for refinement. Generate, evaluate, identify what works and what doesn't, and request modifications. "Make it wider," "reduce decorative detail," "change proportions." Each iteration moves closer to desired outcomes.

This iterative mindset differs from traditional modeling where you plan comprehensively before executing. With generation, rapid iteration is economical. Generate quickly, evaluate, adjust. The speed of generation enables exploration patterns impractical with manual modeling.

Don't expect first-try perfection. Budget time for three to five generation cycles per concept. Setting this expectation up front prevents frustration when initial results need refinement, while leaving enough cycles to reach a quality outcome.
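A cycle budget like this can be expressed as a bounded refinement loop with an early exit. Below is a minimal sketch: `generate`, `evaluate`, and `next_instruction` are hypothetical callables standing in for a generation tool, the designer's judgment, and the next refinement request. The toy versions only simulate improvement so the loop can run; they are not a real generation API.

```python
from typing import Callable, TypeVar

M = TypeVar("M")  # whatever a "generated model" is in a given tool


def refine_with_budget(
    generate: Callable[[list[str]], M],
    evaluate: Callable[[M], float],
    next_instruction: Callable[[M], str],
    threshold: float,
    max_cycles: int = 5,
) -> tuple[M, float, int]:
    """Run at most max_cycles generate/evaluate rounds. Refinement instructions
    accumulate, so each round builds on the previous ones instead of restarting."""
    instructions: list[str] = []
    best, best_score = None, float("-inf")
    for cycle in range(1, max_cycles + 1):
        candidate = generate(instructions)
        score = evaluate(candidate)
        if score > best_score:
            best, best_score = candidate, score
        if score >= threshold:  # good enough: stop early
            return best, best_score, cycle
        instructions.append(next_instruction(candidate))
    return best, best_score, max_cycles


# Toy stand-ins (hypothetical): a "model" is just the tuple of instructions
# applied so far, and each applied instruction adds 2 points out of 10.
EDITS = ["make it wider", "reduce decorative detail", "change proportions"]


def generate(instructions: list[str]) -> tuple:
    return tuple(instructions)


def evaluate(model: tuple) -> float:
    return 5 + 2 * len(model)


def next_instruction(model: tuple) -> str:
    return EDITS[len(model) % len(EDITS)]


best, score, cycles = refine_with_budget(generate, evaluate, next_instruction, threshold=9)
print(cycles, score, best)
# 3 9 ('make it wider', 'reduce decorative detail')
```

The `max_cycles` cap is what turns open-ended tinkering into a planned budget, and keeping the accumulated instructions mirrors the conversational refinement pattern rather than regenerating from a blank prompt each time.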

Generation excels at creating base geometry and exploring forms. Traditional modeling tools excel at precise refinement and functional detail. The most effective workflow combines both—use generation for speed, traditional tools for precision.

Generate overall forms, then use Womp's modeling tools to refine details, adjust proportions, add functional features. This hybrid approach delivers better results faster than either method alone. You capture generation's speed advantage while maintaining control over final quality through expert refinement.

When possible, generate images before converting to 3D. Images generate faster, iterate more predictably, and give a clearer preview of aesthetics than direct 3D generation. Perfect the visual concept as an image, then convert it to 3D geometry.

This workflow particularly benefits organic or stylized designs where aesthetic correctness matters more than technical precision. Validate the look through fast image iteration, then create 3D geometry from the validated concept. This prevents wasting time refining 3D models based on unproven concepts.

Beyond technical capabilities, generation technology delivers measurable business value. Organizations adopting these tools report concrete improvements in productivity, cost efficiency, and competitive positioning.

Products reach market faster when design phases compress. Generation technology reduces concept exploration from weeks to days. This acceleration has compounding effects—shorter design cycles enable more iterations, leading to better products. Faster time-to-market captures opportunities before they close and generates revenue sooner.

For startups and small businesses, speed advantages are particularly valuable. Limited resources make every week count. Generation technology enables small teams to move with agility matching or exceeding larger competitors despite resource constraints.

Time savings translate directly into cost reductions. Concept generation and asset creation tasks that once took designers hours can be completed in minutes, freeing capacity for higher-value activities. Compounded across multiple projects, this efficiency gain significantly improves project economics.

The cost benefits extend beyond labor savings. Faster iteration cycles reduce the risk of expensive late-stage changes. Testing more concepts early increases the probability of optimal solutions, preventing costly redesigns after commitment to manufacturing or development.

Perhaps paradoxically, automation tools can improve creative quality by enabling more exploration. When concept generation is expensive, teams economize by exploring fewer options. Generation makes exploration cheap, so designers can evaluate many more alternatives and select the best rather than settling for the first adequate option.

This increased exploration particularly benefits complex design challenges where optimal solutions aren't obvious. More alternatives tested means higher probability of finding superior solutions. The creative ceiling rises when economic constraints on exploration are removed.

Generation technology enables people with limited technical modeling skills to contribute meaningfully to design processes. Marketers can generate concepts for evaluation. Product managers can prototype ideas for discussion. Engineers can create visualization assets without depending on design specialists.

This democratization improves collaboration by giving diverse stakeholders tools to express and test ideas directly rather than through intermediaries and translations. Better communication across functions leads to better outcomes and reduces friction in cross-functional product development.

Generation technology in 3D design is still evolving rapidly. Current capabilities are impressive but represent the early stages of what's coming. Understanding this trajectory helps organizations plan technology adoption and capability development.

Models are becoming more sophisticated, producing higher quality geometry with better understanding of complex prompts. Training on larger, more diverse datasets improves generalization—systems handle edge cases better and produce more reliable results across wider application ranges.

Integration deepens as generation capabilities embed more fully into design platforms. Rather than standalone tools requiring separate workflows, generation becomes native to design environments, accessed naturally alongside traditional modeling operations.

Specialization emerges as different models optimize for specific applications. Systems trained specifically for product design, architectural elements, character creation, or other domains produce better results than general-purpose models by understanding domain-specific requirements and conventions.

The trajectory points toward generation handling increasingly sophisticated design tasks while designers focus on strategic creative direction, design validation, and complex problem-solving. This partnership between human creativity and machine generation capability enables design outcomes neither could achieve alone.

Adopting generation technology doesn't require wholesale workflow changes or major commitments. Start small, prove value, and expand based on success.

Begin with supporting tasks rather than primary design work. Use generation for concept exploration, supporting assets, or variation creation. These applications deliver clear value with lower risk than immediately applying generation to critical design work.

Experiment with different approaches. Try text-to-3D for some projects, image-to-3D for others. Learn which workflows suit different project types. This experimentation builds intuition about effective generation use without commitment to specific methods.

Combine generation with traditional skills. Don't abandon modeling expertise—augment it with generation capabilities. The combination delivers better results than either approach alone. Use generation for speed, traditional tools for precision, and develop judgment about when each applies.

Integrate with production workflows. For physical products, ensure generated models are printable using services like Womp's integrated printing. Test complete workflows from generation through physical production to identify and address integration issues.

The question isn't whether generation technology will impact 3D design—it already has. The relevant question is how quickly designers and organizations adopt these capabilities and integrate them effectively into creative workflows.

The competitive implications are clear. Teams leveraging generation technology move faster, explore more alternatives, and deliver better outcomes than those relying solely on traditional methods. This advantage compounds over time as generation-enabled teams accumulate more learning cycles and optimization iterations.

For individual designers, generation technology is an opportunity rather than a threat. The technology handles tedious execution, freeing you for strategic creative work where human judgment and aesthetic sense provide irreplaceable value. Designers who embrace generation as a tool augmenting their capabilities will thrive in this evolving landscape.

The transformation is underway. Generation technology has moved from experimental to practical, from specialist tool to accessible capability. Platforms like Womp, through Spark, demonstrate how powerful generation capabilities integrate into intuitive, accessible design environments.

The future of 3D design is generation-enhanced creativity—human designers providing vision and judgment, generation technology providing speed and exploration capability, together achieving outcomes neither could reach alone.

Ready to experience generation-powered design? Explore Womp Spark and discover how conversational generation creates professional 3D models from simple descriptions.